Compliant Residual DAgger

Improving Real-World Contact-Rich Manipulation with Human Corrections

Xiaomeng Xu* Yifan Hou*

Chendong Xin

Zeyi Liu

Shuran Song

Stanford University

Neural Information Processing Systems (NeurIPS 2025)

Human-to-Robot Workshop (CoRL 2025) Best Paper Award


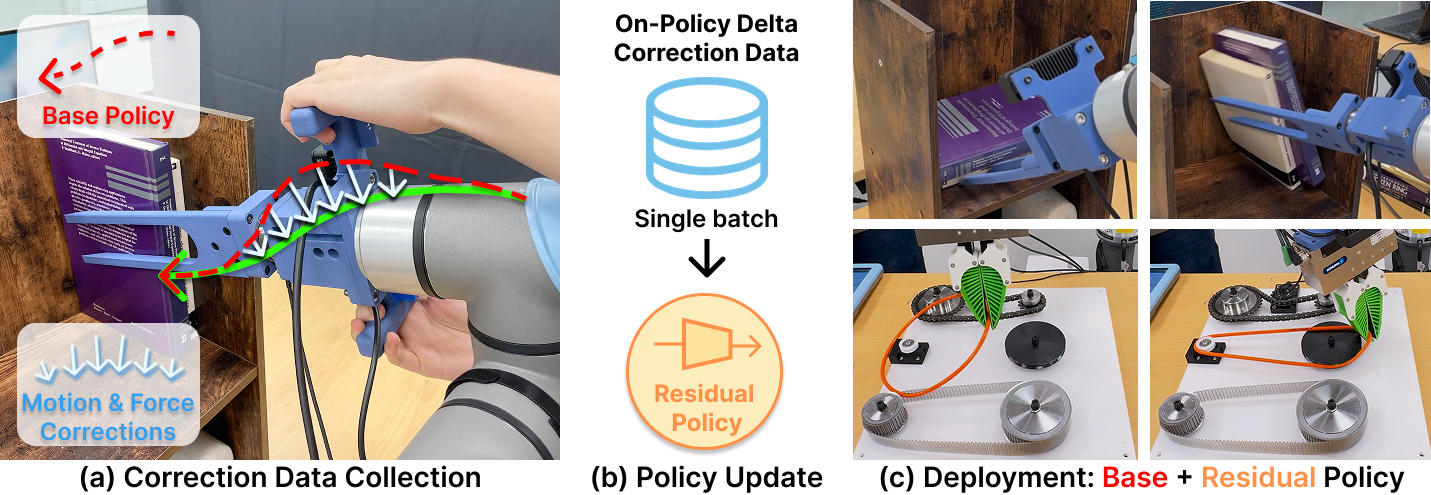

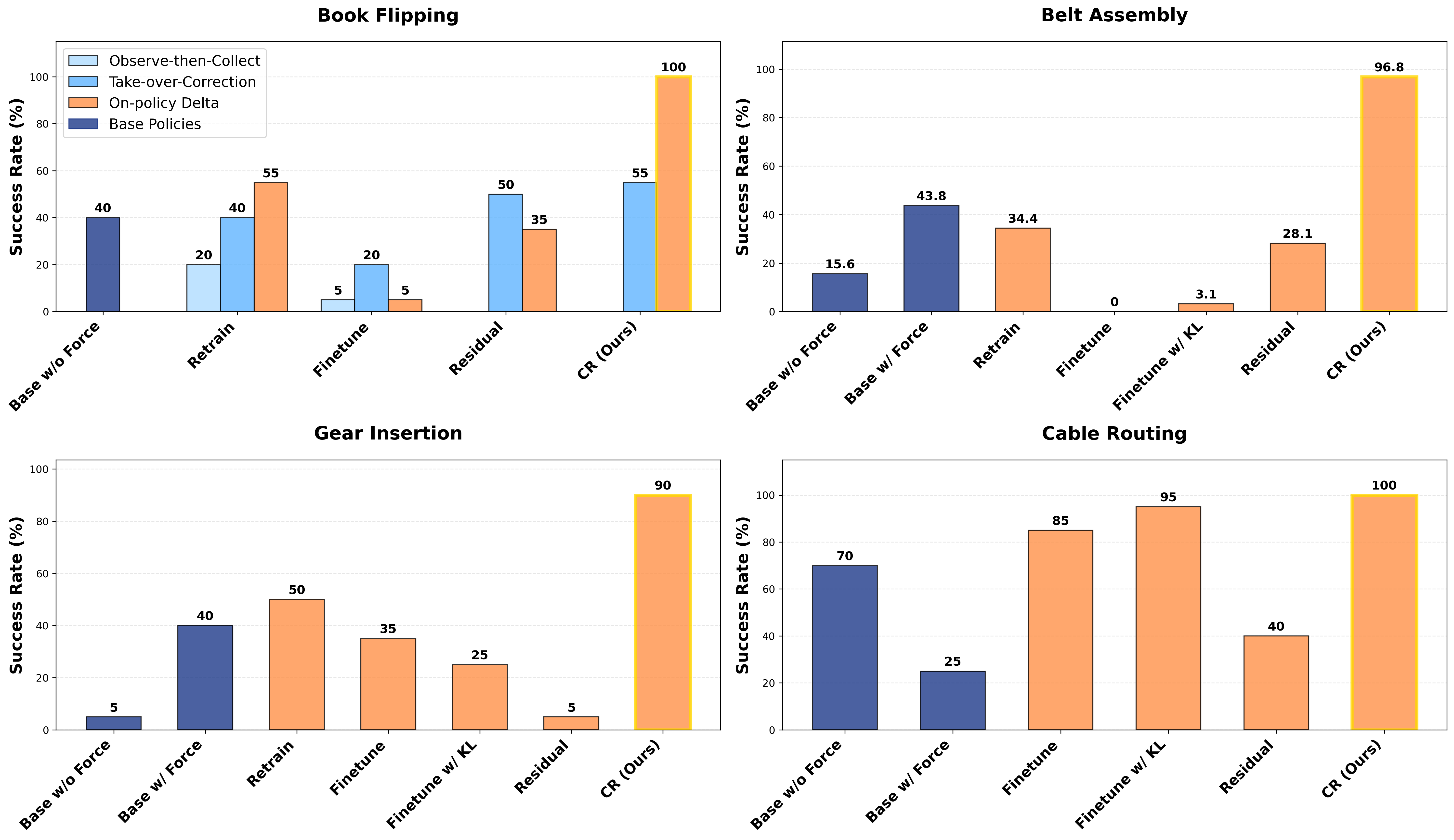

We address key challenges in Dataset Aggregation (DAgger) for real-world contact-rich manipulation: how to collect informative human correction data and how to effectively update policies with this new data. We introduce Compliant Residual DAgger (CR-DAgger), which contains two novel components: 1) a Compliant Intervention Interface that leverages compliance control, allowing humans to provide gentle, accurate delta action corrections without interrupting the ongoing robot policy execution; and 2) a Compliant Residual Policy formulation that learns from human corrections while incorporating force feedback and force control. Our system significantly enhances performance on precise contact-rich manipulation tasks using minimal correction data, improving base policy success rates by over 50% on four challenging tasks (book flipping, belt assembly, gear insertion, and cable routing) while outperforming both retraining-from-scratch and finetuning approaches. Through extensive real-world experiments, we provide practical guidance for implementing effective DAgger in real-world robot learning tasks.
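The abstract describes corrections as "delta actions" collected under compliance control. The page gives no controller details, so the following is only a minimal sketch under an assumed model: each axis behaves like a critically damped virtual spring (an admittance law m·a + d·v + k·(x − x_cmd) = f_ext) around the policy's commanded position. A human push then displaces the end effector by roughly f_ext / k, and that displacement is logged as the delta correction while the base command keeps running. The function names and gains (`simulate_admittance`, `delta_correction`, `stiffness=200.0`) are hypothetical.

```python
import numpy as np


def simulate_admittance(x_cmd, f_ext, stiffness=200.0, mass=1.0, dt=1e-3, steps=2000):
    """Integrate m*a + d*v + k*(x - x_cmd) = f_ext per axis with semi-implicit Euler.

    Hypothetical gains: the project page does not state the real controller parameters.
    """
    x_cmd = np.asarray(x_cmd, dtype=float)
    f_ext = np.asarray(f_ext, dtype=float)
    damping = 2.0 * np.sqrt(stiffness * mass)  # critical damping: no oscillation
    x = x_cmd.copy()
    v = np.zeros_like(x)
    for _ in range(steps):
        a = (f_ext - damping * v - stiffness * (x - x_cmd)) / mass
        v = v + a * dt  # update velocity first (semi-implicit Euler)
        x = x + v * dt
    return x


def delta_correction(x_cmd, x_measured):
    """Label a human correction as the offset from the policy's commanded position."""
    return np.asarray(x_measured, dtype=float) - np.asarray(x_cmd, dtype=float)


x_cmd = np.array([0.40, 0.00, 0.20])  # policy's commanded position (m), illustrative
f_human = np.array([0.0, 5.0, 0.0])   # steady human push along y (N), illustrative
x_meas = simulate_admittance(x_cmd, f_human)
delta = delta_correction(x_cmd, x_meas)
print(delta)  # should settle near f / k = 5 / 200 = 0.025 m along y
```

Because the spring keeps pulling toward the policy's command, the human only supplies the difference, which is why corrections stay small and the robot never stops executing the policy.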

System Overview

To improve a robot manipulation policy, we propose a compliant intervention interface (a) for collecting human correction data, use this data to update a compliant residual policy (b), and thoroughly study the effects of both by deploying the updated policy on two contact-rich manipulation tasks in the real world (c).
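The residual idea in step (b) can be illustrated with a toy stand-in. This sketch assumes a frozen base policy and fits a linear residual by least squares on delta-correction labels only; the actual method trains a learned residual policy with force feedback, and everything here (`base_policy`, the two-feature observation, the synthetic labels) is hypothetical. The base policy ignores a "force" feature that the corrections depend on, so the residual is the only part that can use it.

```python
import numpy as np

rng = np.random.default_rng(0)


def base_policy(obs):
    """Hypothetical frozen base policy: uses only the first feature, ignores force."""
    return 0.5 * obs[:, :1]


# Hypothetical correction data: obs = [position feature, force reading]
obs = rng.normal(size=(200, 2))
true_delta = 0.3 * obs[:, 1:2]  # corrections depend on the force channel
delta_labels = true_delta + 0.01 * rng.normal(size=(200, 1))  # noisy human deltas

# Fit residual r(obs) = [obs, 1] @ W on the delta labels; base policy stays frozen.
X = np.hstack([obs, np.ones((len(obs), 1))])
W, *_ = np.linalg.lstsq(X, delta_labels, rcond=None)


def residual_policy(obs):
    """Predict a delta action from observations, including the extra force feature."""
    return np.hstack([obs, np.ones((len(obs), 1))]) @ W


def combined_policy(obs):
    """Final action = base action + learned residual delta."""
    return base_policy(obs) + residual_policy(obs)


test_obs = rng.normal(size=(50, 2))
target = base_policy(test_obs) + 0.3 * test_obs[:, 1:2]
err_base = np.mean((base_policy(test_obs) - target) ** 2)
err_comb = np.mean((combined_policy(test_obs) - target) ** 2)
print(f"base MSE {err_base:.4f}, base + residual MSE {err_comb:.6f}")
```

Keeping the base policy fixed means the small correction dataset only has to explain the delta, not the whole behavior.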

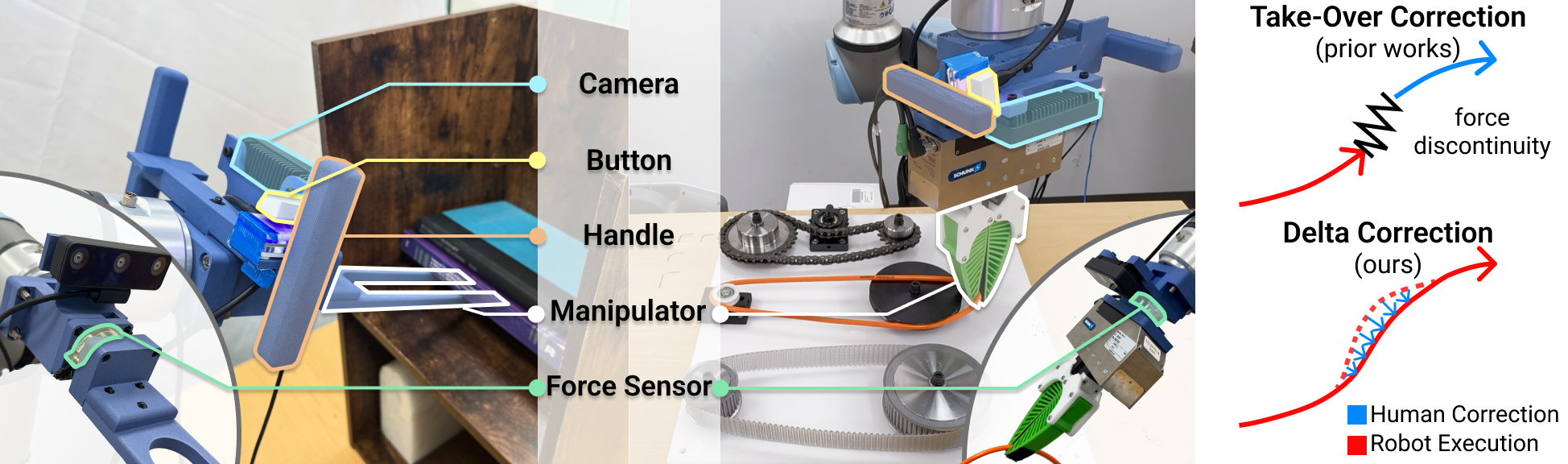

Compliant Intervention Interface


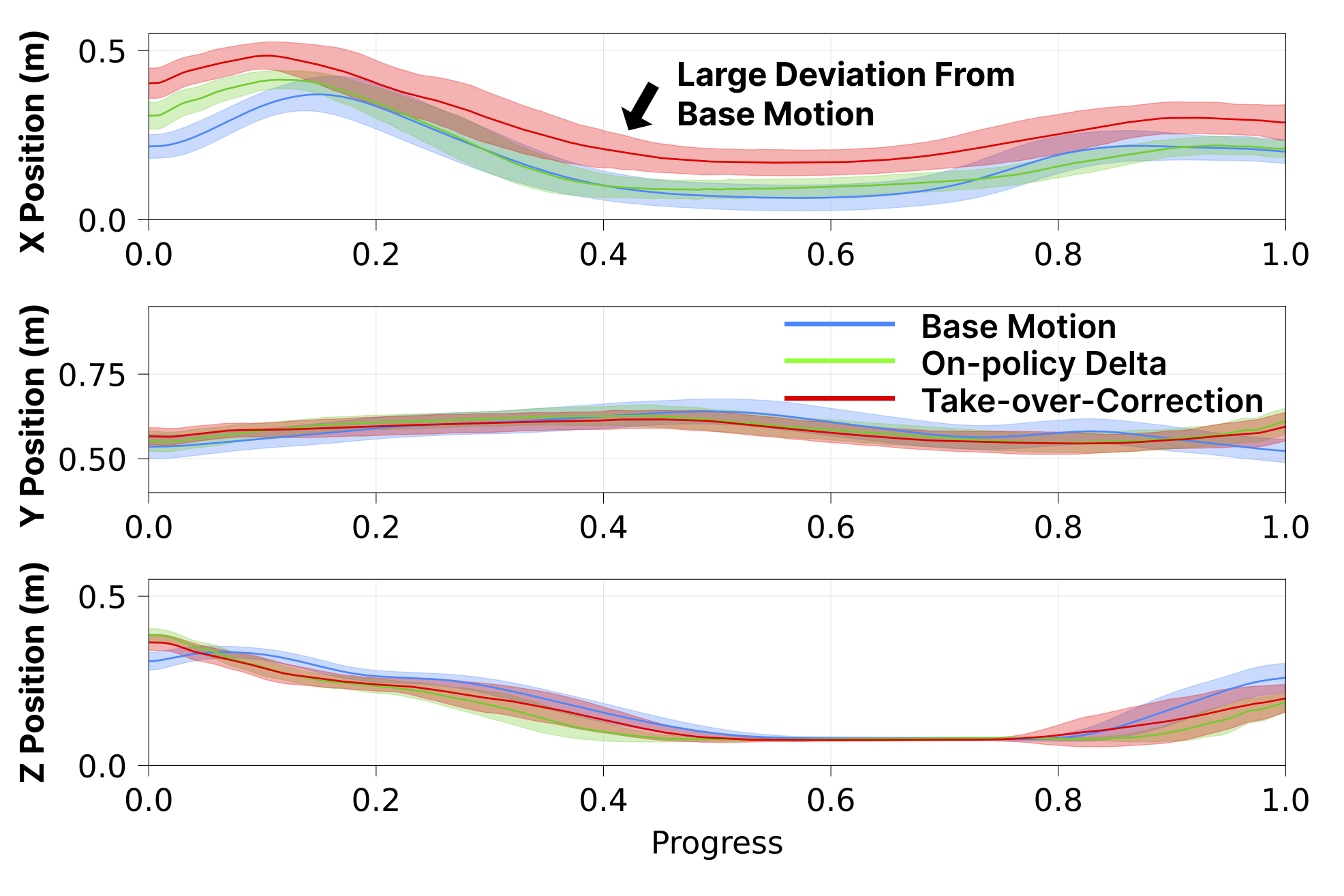

This figure shows the distribution of fingertip trajectories across all dimensions for the base policy training data, the [On-Policy Delta] data, and the [Take-Over-Correction] data. The [On-Policy Delta] distribution is better aligned with the base policy training distribution than the [Take-Over-Correction] distribution.
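The page compares these distributions visually and does not name a numeric metric. One possible way to quantify "better aligned", offered here as an assumption, is a per-dimension 1-D Wasserstein-1 distance, approximated by comparing quantile functions on a fixed grid. The datasets below are synthetic Gaussians standing in for fingertip coordinates, not the paper's data, and the helper names are hypothetical.

```python
import numpy as np


def wasserstein_1d(u, v, n_quantiles=1000):
    """Approximate 1-D Wasserstein-1 distance as mean |quantile_u - quantile_v|."""
    q = (np.arange(n_quantiles) + 0.5) / n_quantiles  # midpoints of the quantile grid
    return float(np.mean(np.abs(np.quantile(u, q) - np.quantile(v, q))))


def per_dimension_distance(reference, other):
    """One distance per state dimension; inputs have shape (num_samples, num_dims)."""
    return np.array([wasserstein_1d(reference[:, d], other[:, d])
                     for d in range(reference.shape[1])])


# Synthetic stand-ins (illustrative only): small shift vs. large shift and spread
rng = np.random.default_rng(0)
base = rng.normal(0.0, 1.0, size=(5000, 3))
on_policy = rng.normal(0.05, 1.0, size=(2000, 3))
take_over = rng.normal(0.8, 1.5, size=(2000, 3))

d_on = per_dimension_distance(base, on_policy)
d_take = per_dimension_distance(base, take_over)
print("on-policy:", d_on.round(3), "take-over:", d_take.round(3))
```

A lower value in a dimension means the correction data stays closer to the states the base policy was trained on.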

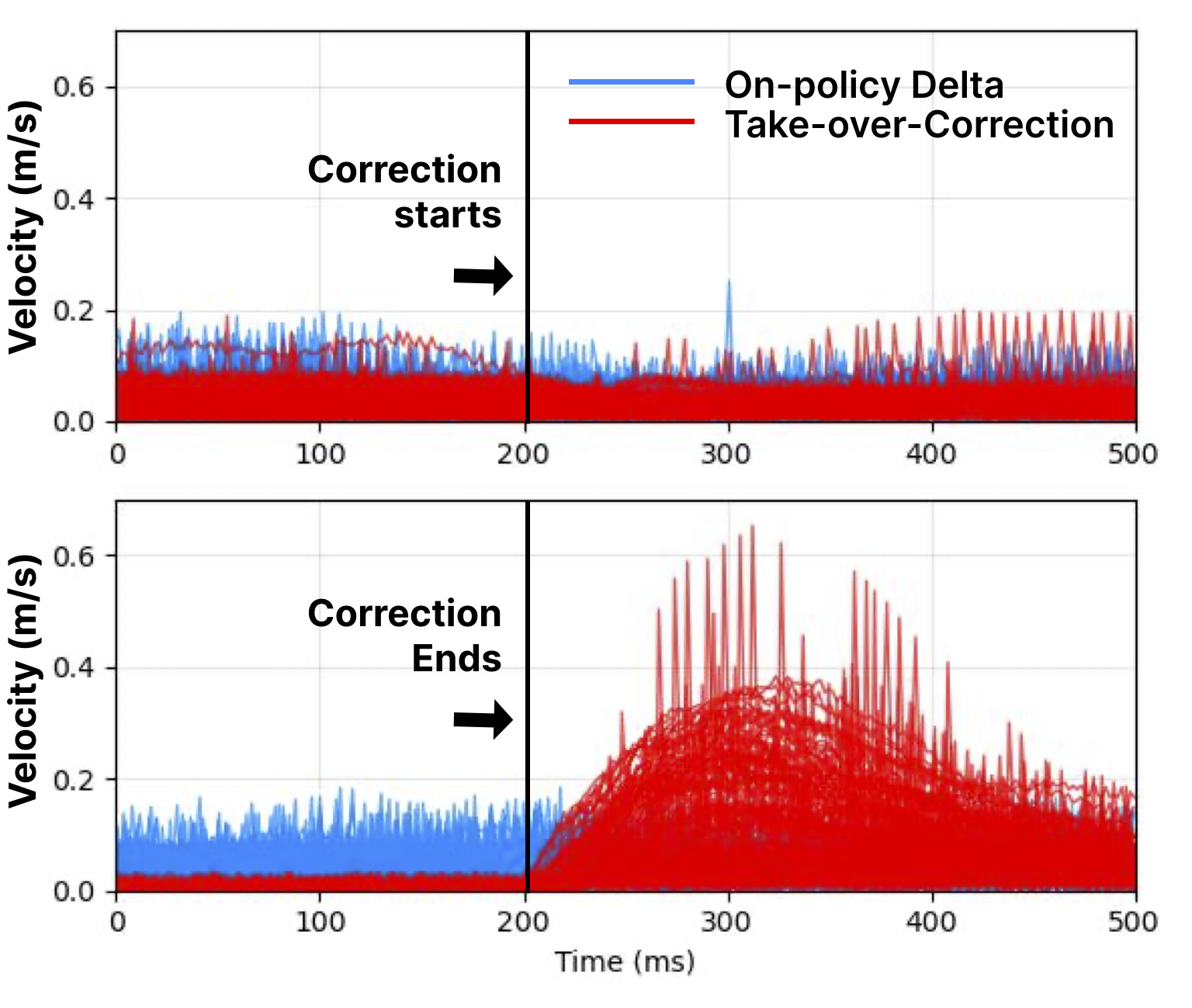

This figure compares velocity magnitudes within 1.5 s of the start and end of each correction. [On-Policy Delta] velocity magnitudes are smaller and more consistent, whereas [Take-Over-Correction] shows notably larger magnitudes and variation, indicating that [On-Policy Delta] encourages smoother trajectories.
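The windowed comparison can be sketched as follows. The page does not say how velocity was computed, so finite differences via `np.gradient` are an assumption, as are the helper name `windowed_speed` and the synthetic trajectories: one moves at a steady 5 cm/s, the other has an abrupt 10 cm jump at t = 5 s, mimicking a sudden take-over.

```python
import numpy as np


def windowed_speed(t, pos, event_time, window=1.5):
    """Speed samples within +/- `window` seconds of an event (correction start or end).

    t: (T,) timestamps in seconds; pos: (T, 3) fingertip positions in meters.
    """
    vel = np.gradient(pos, t, axis=0)     # finite-difference velocity (m/s)
    speed = np.linalg.norm(vel, axis=1)   # velocity magnitude per sample
    mask = np.abs(t - event_time) <= window
    return speed[mask]


t = np.linspace(0.0, 10.0, 1001)  # 100 Hz timestamps (synthetic)
x = 0.05 * t                      # steady 5 cm/s motion along x
smooth = np.stack([x, np.zeros_like(t), np.zeros_like(t)], axis=1)
jerky = smooth.copy()
jerky[t > 5.0, 0] += 0.10         # abrupt 10 cm jump right after t = 5 s

s_smooth = windowed_speed(t, smooth, event_time=5.0)
s_jerky = windowed_speed(t, jerky, event_time=5.0)
print(s_smooth.mean(), s_jerky.mean(), s_jerky.max())
```

The jump shows up as a large speed spike inside the window, which is the kind of magnitude and variation the figure attributes to take-over corrections.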

Findings & Results

Finding 1: Compliant Residual Policy improves base policy by a large margin


Finding 2: Residual policy allows an additional useful modality to be added during correction


Finding 3: Smooth On-Policy Delta data makes training more stable


Finding 4: Retraining the base policy is stable but learns correction behavior slowly


Finding 5: Finetuning the base policy is unstable


Paper

Neural Information Processing Systems (NeurIPS 2025): arXiv:2506.16685 or here

Extended Version: here

Citation

@article{xu2025compliant,
  title={Compliant Residual DAgger: Improving Real-World Contact-Rich Manipulation with Human Corrections},
  author={Xu, Xiaomeng and Hou, Yifan and Liu, Zeyi and Song, Shuran},
  journal={arXiv preprint arXiv:2506.16685},
  year={2025}
}